Evaluating 3DMOT under Latency

A novel evaluation metric for multi-object tracking

During my internship at BMW, I worked on a novel evaluation metric for multi-object tracking (MOT) under latency. The metric can be used to evaluate and benchmark the performance of MOT algorithms under latency in the context of autonomous driving.

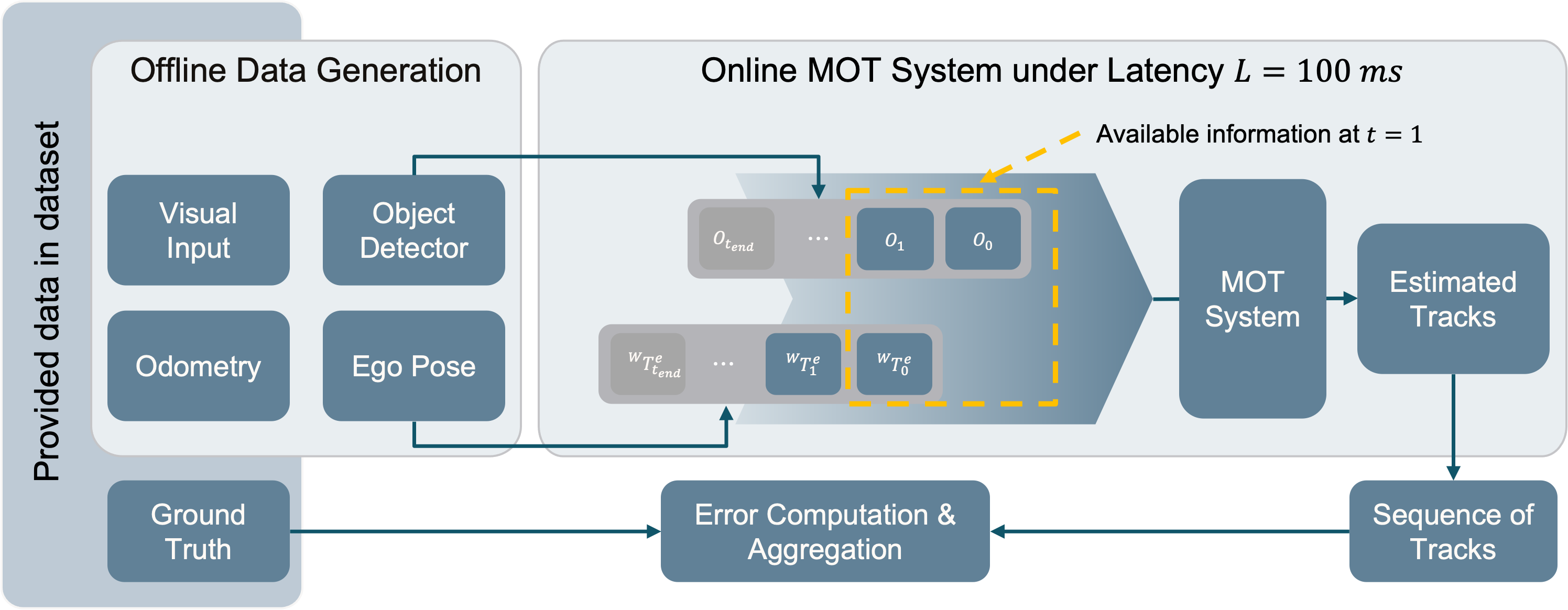

Latency between the input data streams of an MOT system (e.g. LiDAR and pose information) occurs naturally. It is caused by several factors, such as differing sensor readout times and subsequent processing. Depending on its magnitude, latency can significantly degrade the performance of the MOT system. To simulate these effects, I developed an evaluation framework that lets me mix and match different tracking pipelines and evaluate their performance under latency, using data from the KITTI dataset.
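A minimal sketch of how two streams can be desynchronized, assuming latency is modeled as a fixed lag of whole frames on the pose stream. This is an illustrative choice: real sensor latencies need not be frame-aligned, and the project may have delayed a different stream. The helper `desynchronize` is hypothetical. At KITTI's 10 Hz frame rate, one frame of lag corresponds to roughly 100 ms.

```python
from typing import List, Sequence, Tuple, TypeVar

S = TypeVar("S")  # one LiDAR sweep (any representation)
P = TypeVar("P")  # one ego pose (any representation)


def desynchronize(sweeps: Sequence[S], poses: Sequence[P], lag: int) -> List[Tuple[S, P]]:
    """Pair LiDAR sweep t with the pose from frame t - lag to emulate a delayed pose stream.

    Hypothetical helper. The first `lag` sweeps reuse the earliest pose,
    since no older pose exists."""
    if lag < 0:
        raise ValueError("lag must be non-negative")
    if len(sweeps) != len(poses):
        raise ValueError("both streams must contain the same number of frames")
    return [(sweeps[t], poses[max(t - lag, 0)]) for t in range(len(sweeps))]


if __name__ == "__main__":
    pairs = desynchronize(["s0", "s1", "s2", "s3"], ["p0", "p1", "p2", "p3"], lag=2)
    print(pairs)  # [('s0', 'p0'), ('s1', 'p0'), ('s2', 'p0'), ('s3', 'p1')]
```

Reusing the earliest pose for the first frames keeps both streams the same length; dropping those frames instead would be an equally valid choice.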

Computing the errors of an MOT pipeline under latency is tricky. First, we desynchronize the input data streams; then we run the tracking pipeline. To match the tracks estimated under latency to their ground truth, we need to align them in space and time. This alignment can be done effectively using the historic poses of the ego vehicle and of the tracks. Once estimated tracks are matched to their ground truth, we can compute the state errors and aggregate them across all tracks and all frames.
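A minimal sketch of the alignment, matching, and aggregation steps under simplifying assumptions: positions are 2D bird's-eye-view points, ego poses are hypothetical `(x, y, yaw)` tuples in a world frame, and each estimate is mapped into the world frame with the ego pose recorded at the timestamp of the sensor data it was computed from. Matching uses greedy nearest-neighbour assignment with a distance gate, a stand-in chosen for brevity rather than the matching used in the project. All function names and the 2 m gate are illustrative.

```python
import math
from typing import Dict, Iterable, List, Tuple

# Hypothetical representations (assumptions, not the project's data format).
Point = Tuple[float, float]          # 2D BEV position
Pose2D = Tuple[float, float, float]  # ego pose (x, y, yaw) in the world frame
Frame = Tuple[Dict[int, Point], Pose2D, Dict[int, Point]]


def ego_to_world(p: Point, pose: Pose2D) -> Point:
    """Map a point from the ego frame into the world frame (SE(2) transform)."""
    x, y, yaw = pose
    c, s = math.cos(yaw), math.sin(yaw)
    return (x + c * p[0] - s * p[1], y + s * p[0] + c * p[1])


def match_and_errors(est: Dict[int, Point], gt: Dict[int, Point],
                     gate: float = 2.0) -> List[float]:
    """Greedily match estimates to ground truth (both in the world frame) in order
    of increasing distance; return the position error of each matched pair."""
    candidates = sorted(
        (math.dist(e, g), ei, gi) for ei, e in est.items() for gi, g in gt.items()
    )
    used_est, used_gt, errors = set(), set(), []
    for d, ei, gi in candidates:
        if d > gate:
            break  # all remaining candidates are even farther apart
        if ei in used_est or gi in used_gt:
            continue  # one side of this pair is already matched
        used_est.add(ei)
        used_gt.add(gi)
        errors.append(d)
    return errors


def aggregate_errors(frames: Iterable[Frame]) -> List[float]:
    """Collect matched position errors across all frames.

    Each frame holds (estimates in the ego frame, ego pose at the sensor
    timestamp, ground truth in the world frame for that same timestamp)."""
    all_errors: List[float] = []
    for est_ego, pose, gt_world in frames:
        est_world = {tid: ego_to_world(p, pose) for tid, p in est_ego.items()}
        all_errors.extend(match_and_errors(est_world, gt_world))
    return all_errors


if __name__ == "__main__":
    frames = [({0: (1.0, 0.0)}, (10.0, 0.0, math.pi / 2), {3: (10.0, 1.2)})]
    print(aggregate_errors(frames))  # one matched pair with an error of about 0.2
```

An optimal (Hungarian) assignment could replace the greedy loop; the greedy version keeps the sketch free of dependencies.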

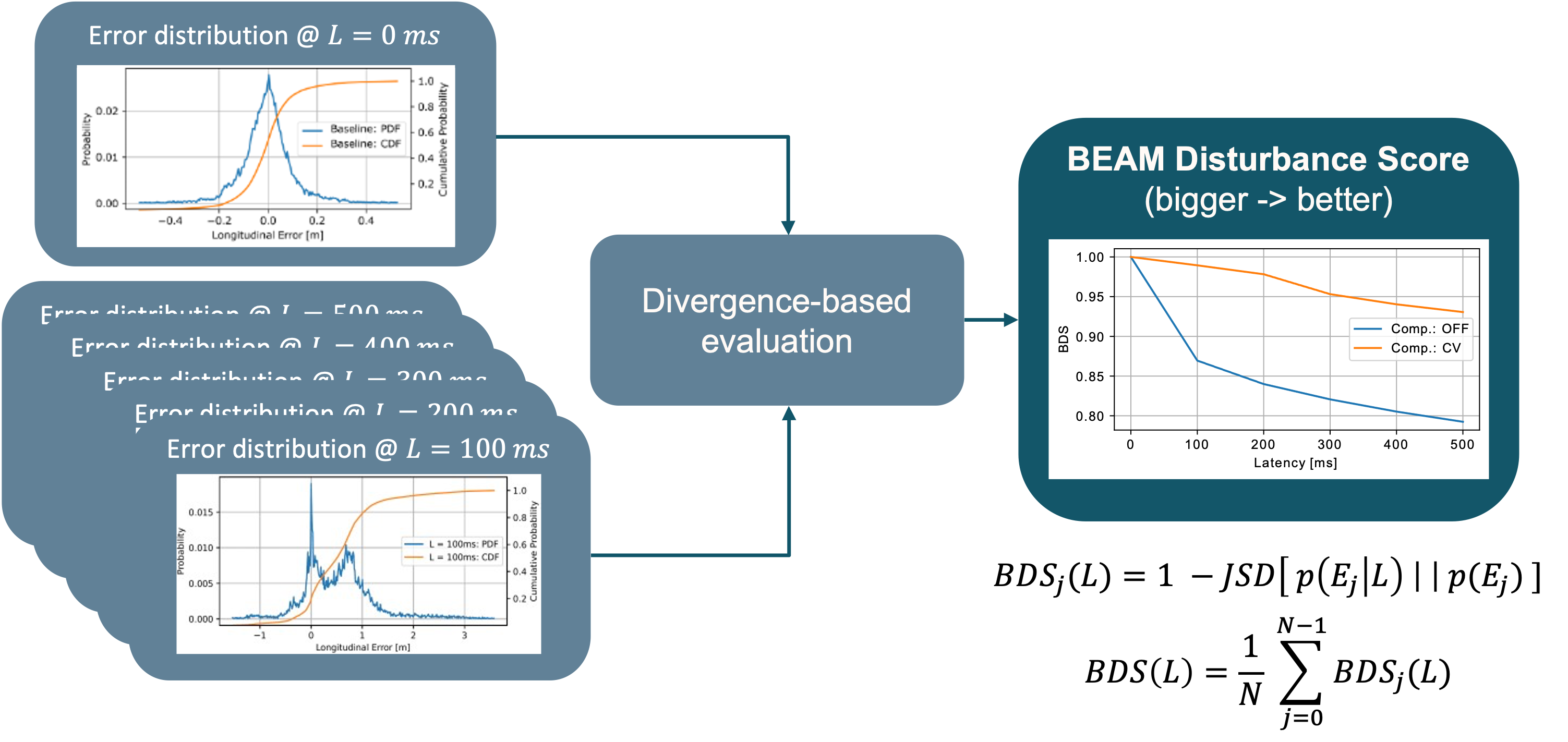

To evaluate performance degradation, we first need a baseline: the performance of the tracking pipeline without latency. We can then quantify the degradation under latency by comparing the error distribution under latency with the baseline error distribution using a divergence measure, such as the Kullback-Leibler (KL) divergence or the Jensen-Shannon divergence. Here, the Jensen-Shannon divergence is used because it is easier to interpret than the KL divergence: it is symmetric, always finite, and bounded to [0, 1] when base-2 logarithms are used.
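A sketch of the Jensen-Shannon comparison, assuming the aggregated errors are scalar (e.g. position error magnitudes) and are binned into normalized histograms with shared, fixed bin edges. The helper names, bin edges, and error values below are hypothetical toy inputs, not project results.

```python
import bisect
import math
from typing import List, Sequence


def histogram(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Normalized histogram over bins [edges[i], edges[i+1]).
    Values outside the edges are clipped into the first or last bin."""
    if len(edges) < 2 or not values:
        raise ValueError("need at least two edges and one value")
    counts = [0] * (len(edges) - 1)
    for v in values:
        i = bisect.bisect_right(edges, v) - 1
        counts[min(max(i, 0), len(counts) - 1)] += 1
    return [c / len(values) for c in counts]


def kl_base2(p: Sequence[float], q: Sequence[float]) -> float:
    """KL divergence D(p || q) in bits; terms with p_i = 0 contribute nothing."""
    return sum(pi * math.log2(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def js_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """Jensen-Shannon divergence in bits, bounded to [0, 1]."""
    m = [(pi + qi) / 2 for pi, qi in zip(p, q)]
    # m_i >= p_i / 2, so m_i > 0 wherever p_i > 0 and every KL term stays finite.
    return 0.5 * kl_base2(p, m) + 0.5 * kl_base2(q, m)


if __name__ == "__main__":
    edges = [0.0, 0.5, 1.0, 2.0, 4.0]                  # hypothetical error bins in metres
    baseline = histogram([0.1, 0.2, 0.3, 0.6], edges)  # toy errors without latency
    delayed = histogram([0.4, 0.9, 1.5, 3.0], edges)   # toy errors under latency
    print(round(js_divergence(baseline, delayed), 3))  # 0.344
```

Both histograms must share the same bin edges; otherwise the two distributions are not defined over the same support and the divergence is not meaningful. A value of 0 means the error distribution under latency matches the baseline, and 1 means the two distributions do not overlap at all.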